CURRENT RESEARCH

Hybrid Multicore and Exascale Computing for Scientific Applications

Funded by NSF, DOE/NNSA, and AFOSR

Hybrid multicore processors (HMPs), which combine multiple CPU cores with GPUs, are dominating the landscape of next-generation computing, from desktops to extreme-scale systems. We are developing algorithms and software that exploit these architectures for a variety of computational science applications.

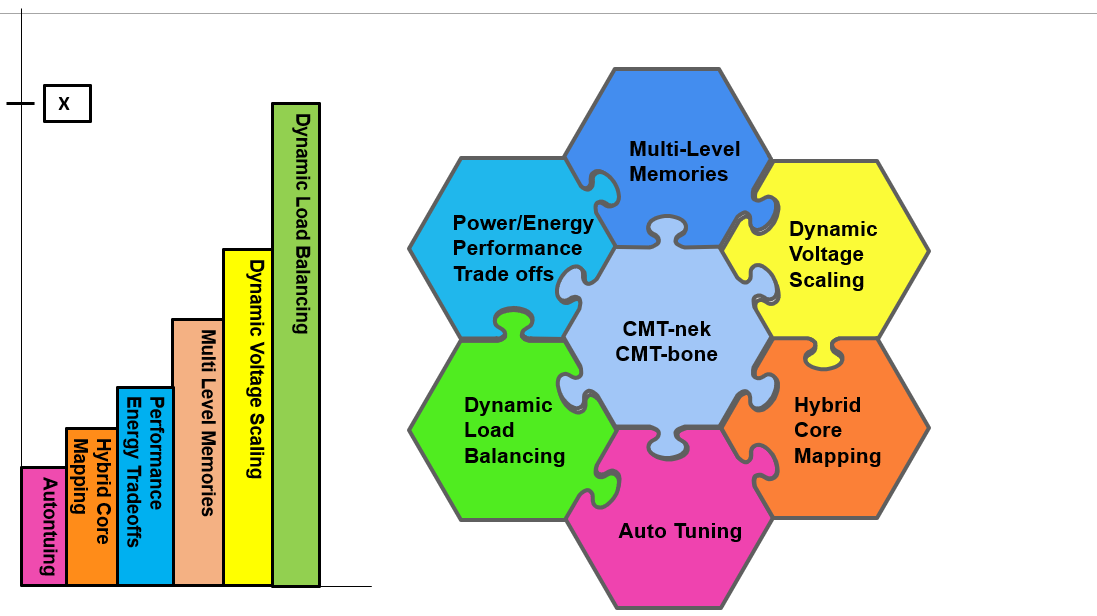

Our research focuses on the following:

- Performance-energy tradeoffs: Energy consumption is a critical issue in large-scale data centers and next-generation supercomputers because the cost of powering and cooling them has become comparable to the cost of acquisition. We have developed novel algorithms and software that reduce the energy requirements of complex workflows on these machines by using reconfigurable caches and dynamic voltage scheduling. This research has produced several contributions to energy management for multicore and manycore machines, which are reported in a co-authored book (with Wang and Mishra), Dynamic Reconfiguration in Real-Time Systems, published by Springer-Verlag.

- NNSA PSAAP Center for Combustible Turbulence: We have developed software, designed to run at exascale, for complex multiscale problems that combine three physical phenomena: compressibility, multiphase flow, and turbulence. As a co-PI and computer science lead of the center, I have made the following key contributions:

  - Dynamic load balancing of Euler-Lagrange multiphase simulations on millions of MPI ranks, covering the particle-particle collision and particle-fluid interpolation algorithms. My group has developed algorithms that automatically detect when to rebalance the load and then remap the moving particles and the underlying mesh data structures. Our dynamic load balancing strategy reduces the overall computational cost by an order of magnitude.

  - Data decomposition and load balancing algorithms for hybrid multicore processors that derive a near-optimal division of the workload between CPU cores and GPU cores. We derive Pareto-optimal curves that help the user choose a system configuration based on their optimization goals; the configuration includes automatically selected operating frequencies for the CPUs and GPUs.

- Multiple GPU SAR reconstruction: We have developed novel sequential and parallel algorithms for real-time change detection using SAR images on an airborne device. Back-projection is a key technique for SAR-based image reconstruction. When image frames are used for change detection, the computational burden can be mitigated by rendering areas of low or no activity at lower resolution, which reduces the total amount of computation by an order of magnitude; however, this requires dynamic load balancing. We have achieved several hundred gigaflops on an NVIDIA GPU-based HMP and 100+ teraflops on a 128-GPU system. The key computer science challenges that we addressed include intelligent task-to-core mapping, energy-aware task scheduling, and minimization of communication and synchronization overhead.

- GPU-based libraries for sparse matrix factorization (Sparsekaffe): Sparse direct methods (QR, Cholesky, and LU factorization) are key kernels in many solvers, including those for sparse linear systems, sparse linear least squares, and eigenvalue problems. Consequently, they form the backbone of a broad spectrum of large-scale computational science applications. We have developed highly optimized software libraries for parallel sparse direct methods on single-GPU and multi-GPU systems. Our algorithms and software exploit the irregular and hierarchical structure of these factorizations to achieve orders-of-magnitude gains in computational performance. They use multiple GPU streams and overlap communication with computation to achieve high performance.
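The Pareto-optimal curves mentioned under the hybrid multicore load balancing work can be illustrated with a small example. The sketch below is not the center's software; it only shows the general idea of filtering candidate CPU/GPU frequency configurations down to those that are not dominated in both runtime and energy. The `Config` type, the frequency values, and the time and energy numbers are all hypothetical and chosen purely for illustration.

```python
from typing import NamedTuple, Optional


class Config(NamedTuple):
    cpu_mhz: int     # hypothetical CPU frequency setting
    gpu_mhz: int     # hypothetical GPU frequency setting
    time_s: float    # measured or modeled runtime (illustrative value)
    energy_j: float  # measured or modeled energy (illustrative value)


def pareto_front(configs):
    """Return the configs not dominated in (time_s, energy_j), sorted by time."""
    front = []
    best_energy = float("inf")
    # Sweep from fastest to slowest; a config survives only if it uses
    # strictly less energy than every faster (or equally fast) config.
    for c in sorted(configs, key=lambda c: (c.time_s, c.energy_j)):
        if c.energy_j < best_energy:
            front.append(c)
            best_energy = c.energy_j
    return front


def fastest_within_energy(front, budget_j) -> Optional[Config]:
    """Pick the fastest Pareto-optimal config whose energy fits the budget."""
    for c in front:  # front is already sorted by increasing time
        if c.energy_j <= budget_j:
            return c
    return None


# Illustrative data only; not results from the research described above.
configs = [
    Config(2000, 1000, 10.0, 500.0),
    Config(1500, 1000, 12.0, 420.0),
    Config(2000, 700, 13.0, 450.0),   # slower and costlier than (12.0, 420.0)
    Config(1200, 700, 16.0, 380.0),
    Config(1000, 500, 20.0, 400.0),   # slower and costlier than (16.0, 380.0)
]
front = pareto_front(configs)
choice = fastest_within_energy(front, budget_j=430.0)
```

A user with an energy budget would pick a point on the front as shown with `fastest_within_energy`; a user with a deadline could scan the same front from the other end for the lowest-energy configuration that still meets it.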
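The Euler-Lagrange load balancing item above mentions detecting automatically when to rebalance. The text does not state the criterion used, so the following sketch assumes one common heuristic: accumulate the time lost to imbalance at each step (the slowest rank's time minus the mean) and rebalance once that loss exceeds the estimated cost of a rebalance. The class name `RebalanceTrigger`, the cost value, and the per-rank timings are hypothetical.

```python
def imbalance(loads):
    """Ratio of the heaviest rank's load to the mean load (1.0 means balanced)."""
    mean = sum(loads) / len(loads)
    return max(loads) / mean if mean > 0 else 1.0


class RebalanceTrigger:
    """Signal a rebalance once accumulated imbalance loss exceeds its cost.

    This is an assumed heuristic for illustration, not the criterion
    used in the research described above.
    """

    def __init__(self, rebalance_cost_s):
        self.rebalance_cost_s = rebalance_cost_s
        self.lost_s = 0.0  # time lost to imbalance since the last rebalance

    def step(self, rank_times_s):
        """Record one time step's per-rank times; return True to rebalance."""
        mean = sum(rank_times_s) / len(rank_times_s)
        # Every rank waits for the slowest one, so the loss is max minus mean.
        self.lost_s += max(rank_times_s) - mean
        if self.lost_s > self.rebalance_cost_s:
            self.lost_s = 0.0
            return True
        return False


# Illustrative per-rank step times (seconds) as particles drift toward rank 0.
steps = [
    [1.0, 1.0, 1.0, 1.0],
    [1.4, 0.9, 0.9, 0.8],
    [1.5, 0.9, 0.8, 0.8],
    [1.6, 0.8, 0.8, 0.8],
]
trigger = RebalanceTrigger(rebalance_cost_s=1.0)
decisions = [trigger.step(t) for t in steps]
```

In this example the trigger fires only on the fourth step, when the accumulated loss passes the assumed one-second rebalance cost. A fixed threshold on `imbalance` would be simpler, but it ignores how expensive the remapping itself is.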