CURRENT RESEARCH

My current research interests are (1) high performance and parallel computing with a focus on energy efficiency and (2) big data science with a focus on data mining/machine learning algorithms for spatiotemporal applications.

Machine Learning based Algorithms and Software for Transportation and Smart Cities

Funded by NSF Cyber-Physical Systems, NSF Smart Communities, and the Florida Department of Transportation

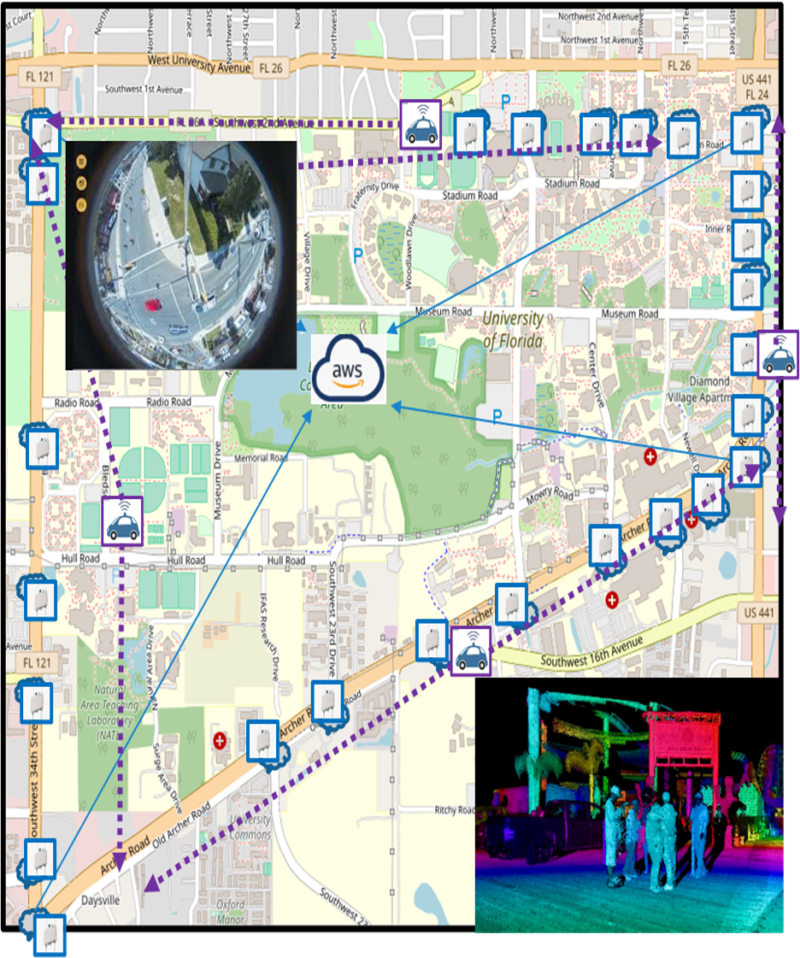

Transportation: Mitigating traffic congestion and improving safety are cornerstones of transportation in smart cities. Current practice collects sensor and video data and analyzes it offline, which limits its ability to proactively reduce traffic fatalities and preventable delays at intersections. I lead the team that has developed real-time machine learning algorithms and software that analyze video feeds from cameras and fuse them with ground sensor data to detect and classify individual vehicles and pedestrians and generate their space-time trajectories in real time. These trajectories are used to improve the safety and efficiency of an intersection by identifying potential collisions, or “near-misses,” as they happen. We are currently working with the City of Gainesville and the City of Orlando to use this software to quantitatively measure and rank intersections by safety, and to transmit information about unsafe behavior to connected vehicles and pedestrians in real time using new communication technologies. We are also developing signalized intersection control strategies that jointly optimize vehicle trajectories and signal control at each intersection, thereby improving throughput and reducing wait times. These advances and algorithms are presently being field-tested at intersections in Gainesville. My overall effort in smart transportation has received significant media coverage and aims to position Gainesville as a leading-edge 21st-century smart city.
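The near-miss idea can be illustrated with a deliberately simplified proxy: the minimum separation distance between two road users' space-time trajectories. This is a sketch under stated assumptions, not the team's algorithm: trajectories are assumed to be sampled at shared integer frame indices in a common metric ground-plane frame, and the `Sample` type, helper names, and 2 m threshold are hypothetical. Established surrogate safety measures such as time-to-collision or post-encroachment time are more involved.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    frame: int  # video frame index shared by all tracked objects (assumed)
    x: float    # ground-plane position in metres (assumed common frame)
    y: float


def min_separation(a, b):
    """Smallest distance between two trajectories over their shared frames.

    Returns (distance_m, frame); distance is math.inf if no frames overlap.
    """
    b_by_frame = {s.frame: s for s in b}
    best_d, best_frame = math.inf, None
    for s in a:
        other = b_by_frame.get(s.frame)
        if other is None:
            continue  # the two objects were not both tracked in this frame
        d = math.hypot(s.x - other.x, s.y - other.y)
        if d < best_d:
            best_d, best_frame = d, s.frame
    return best_d, best_frame


def is_near_miss(a, b, threshold_m=2.0):
    """Flag a pair as a near-miss if they come closer than threshold_m (hypothetical)."""
    d, _ = min_separation(a, b)
    return d < threshold_m


if __name__ == "__main__":
    # A vehicle heading east at 1 m/frame and a pedestrian heading south.
    car = [Sample(f, x=1.0 * f, y=0.0) for f in range(10)]
    ped = [Sample(f, x=5.0, y=6.0 - 1.0 * f) for f in range(10)]
    print(min_separation(car, ped))  # (1.0, 5)
    print(is_near_miss(car, ped))    # True
```

A distance threshold alone ignores speed and heading, which is why real systems typically combine trajectory geometry with timing-based measures.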

Data Science for Health Care Applications

Funded by the National Institute on Aging and Texas Medicaid

Health Care: Understanding the impact of intervening health events (e.g., episodic falls, injuries, and hospitalizations) is an important emerging scientific area in geriatrics and gerontology. Currently, most available data are collected after the event, which limits the development of effective interventions. I have led the development of a sustainable research infrastructure built around a novel smartwatch app called ROAMM (Real-time Online Assessment and Mobility Monitor). The app offers continuous, long-term connectivity and bidirectional interactivity with programmable measurements, a necessity for capturing data surrounding an intervening health event. We have demonstrated the use of this framework to capture the activity and mobility patterns of free-living adults, together with their reported outcomes such as pain and anxiety levels, across a variety of health care studies. This technology is currently being productized and will be used in a variety of clinical trials over the next year.

Hybrid Multicore and Exascale Computing for Scientific Applications

Funded by NSF, DOE/NNSA, and AFOSR

Scientific Computing: Hybrid multicore processors (HMPs), which combine multiple CPU cores with Graphics Processing Units (GPUs), dominate the current generation of computing, from desktops to extreme-scale systems. Much of the previous work on exploiting these architectures targeted applications with static computational structure and regular array data, with no consideration of energy requirements. My work demonstrated that for a variety of applications with dynamic computational structure and sparse data sets (compressible turbulence, synthetic aperture radar, and sparse matrix factorization), efficient parallelization can be achieved, and that software can be developed that provides performance/energy tradeoffs. Such tradeoffs matter both for battery-powered devices and for exascale supercomputers. This work made several important contributions in real-time scheduling of irregular and hierarchical structures, intelligent task-to-core mapping, energy-aware task scheduling, dynamic voltage scaling, and parallelization to millions of cores. Besides making applications run faster, this work bears directly on energy efficiency and climate change, since computers today account for 10% or more of total power consumption.

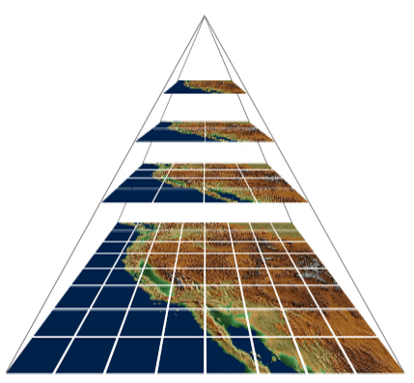
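Energy-aware scheduling with dynamic voltage scaling can be illustrated with the textbook model in which dynamic energy per cycle scales as C·V², so a task that runs at a lower voltage and frequency uses less energy as long as it still meets its deadline. The sketch below picks the lowest-energy operating point that meets a single task's deadline. The voltage/frequency table, the capacitance constant, and all names are hypothetical, static (leakage) power is ignored, and this is not the scheduler from the work described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    freq_hz: float
    volts: float


# Hypothetical voltage/frequency table, not taken from any real processor.
LEVELS = (
    OperatingPoint(1.0e9, 0.80),
    OperatingPoint(1.5e9, 0.95),
    OperatingPoint(2.0e9, 1.10),
)

C_EFF = 1.0e-9  # hypothetical effective switched capacitance per cycle (farads)


def exec_time(cycles, p):
    """Seconds to run `cycles` at operating point p."""
    return cycles / p.freq_hz


def dynamic_energy(cycles, p):
    """Joules under E = C_eff * V^2 * cycles (static/leakage power ignored)."""
    return C_EFF * p.volts ** 2 * cycles


def pick_level(cycles, deadline_s, levels=LEVELS):
    """Lowest-energy operating point that meets the deadline, or None."""
    feasible = [p for p in levels if exec_time(cycles, p) <= deadline_s]
    if not feasible:
        return None
    return min(feasible, key=lambda p: dynamic_energy(cycles, p))


if __name__ == "__main__":
    work = 1.2e9  # cycles
    # 1.0 GHz would take 1.2 s and miss the 1 s deadline, so the
    # cheapest feasible point is 1.5 GHz at 0.95 V.
    best = pick_level(work, deadline_s=1.0)
    print(best, exec_time(work, best), dynamic_energy(work, best))
```

Ignoring leakage is the main simplification: on processors with significant static power, running fast and then idling ("race to idle") can use less total energy, so realistic energy-aware schedulers model both terms.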

Machine Learning for Data Reduction of Scientific Applications

Funded by DOE

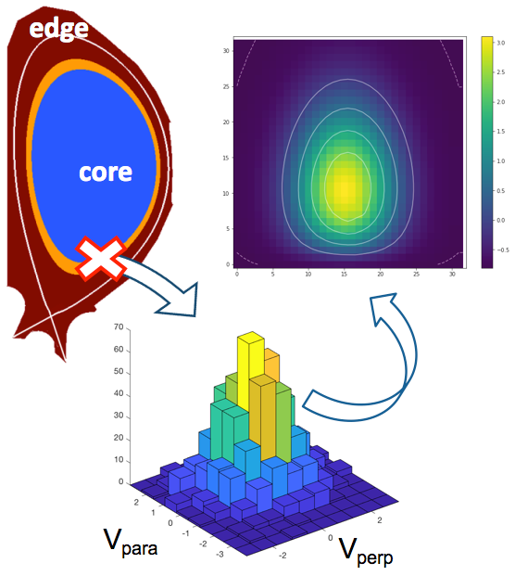

Scientific applications in high-energy physics, nuclear physics, radio astronomy, and light sources generate large volumes of data at high velocity, increasingly outpacing the growth of computing power, network and storage bandwidth, and storage capacity. The same trend holds for next-generation experimental and observational facilities, making data reduction or compression an essential stage of future computing systems. Scientists are principally interested in downstream quantities, called Quantities of Interest (QoI), that are derived from the raw data. It is therefore important that reduction methods quantify the impact of data reduction not only on the primary data (PD) but also on the QoI. The ability to quantify these effects with realistic numerical bounds is essential if scientists are to have confidence in applying data reduction.
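As a minimal illustration of why a guarantee on the primary data does not automatically settle the QoI question, consider the simplest case, assumed here for illustration only: a linear QoI q(x) = a^T x and a reconstruction x̂ whose pointwise (L∞) error on the primary data is at most ε. Hölder's inequality then bounds the QoI error directly. This is a standard inequality, not the method described in this section.

```latex
\begin{aligned}
\|x - \hat{x}\|_\infty \le \varepsilon
\quad\Longrightarrow\quad
|q(x) - q(\hat{x})| &= |a^\top (x - \hat{x})| \\
&\le \|a\|_1 \, \|x - \hat{x}\|_\infty \\
&\le \|a\|_1 \, \varepsilon .
\end{aligned}
```

For the mean, a = (1/n)·1 and ‖a‖₁ = 1, so the error in the mean is at most ε. Nonlinear QoIs admit no such bound in general, which is why guarantees must be established for the QoI as well as the PD.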

Our work develops machine learning based techniques that provide guarantees on both PD and QoI. Our approach is based on the key observation that neural networks with piecewise linear activation units (PLUs), such as ReLU, can be represented as instance-specific linear operators. Experimental results on scientific datasets from fusion, climate, and CFD simulations show that our methods successfully satisfy user-provided error bounds at the instance level while achieving high reduction. Additionally, our reduction methods are easily parallelized and have low computational overhead, making them highly practical for real-time reduction.

(Joint work with Anand Rangarajan and Scott Klasky)
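The key observation about piecewise linear activation units can be checked numerically. For a given input, each ReLU either passes or zeroes its pre-activation, so it acts as a fixed 0/1 diagonal mask; multiplying the layer weight matrices and masks together yields an input-specific affine map (strictly linear when the biases are zero) that reproduces the network's output exactly. The sketch below, in plain Python with a small random multilayer perceptron, builds that map and compares it with the ordinary forward pass. Network sizes, weights, and helper names are hypothetical, and it illustrates only the observation, not the reduction method or its error-bound guarantees.

```python
import random


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """A is m x k, B is k x n; returns the m x n product."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def relu_mlp(layers, x):
    """Forward pass; layers is a list of (W, b); ReLU on all but the last layer."""
    h = x
    for i, (W, b) in enumerate(layers):
        z = [zi + bi for zi, bi in zip(matvec(W, h), b)]
        h = [max(0.0, zi) for zi in z] if i < len(layers) - 1 else z
    return h


def local_affine_map(layers, x):
    """Return (A, c) such that relu_mlp(layers, x) == A x + c at this x.

    Invariant: the current hidden vector h equals A x + c. A ReLU on
    pre-activation z is multiplication by the mask D = diag(z > 0).
    """
    n = len(x)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    c = [0.0] * n
    h = x
    for i, (W, b) in enumerate(layers):
        z = [zi + bi for zi, bi in zip(matvec(W, h), b)]
        A = matmul(W, A)                                # W (A x + c) + b
        c = [ci + bi for ci, bi in zip(matvec(W, c), b)]
        if i < len(layers) - 1:
            mask = [1.0 if zi > 0 else 0.0 for zi in z]  # activation pattern
            A = [[m * a for a in row] for m, row in zip(mask, A)]
            c = [m * ci for m, ci in zip(mask, c)]
            h = [max(0.0, zi) for zi in z]
    return A, c


def rand_layer(n_out, n_in):
    """Hypothetical random layer with weights and biases in [-1, 1]."""
    W = [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    b = [random.uniform(-1, 1) for _ in range(n_out)]
    return W, b


if __name__ == "__main__":
    random.seed(0)
    layers = [rand_layer(8, 4), rand_layer(8, 8), rand_layer(3, 8)]
    x = [0.3, -1.2, 0.7, 2.0]
    A, c = local_affine_map(layers, x)
    y_net = relu_mlp(layers, x)
    y_lin = [ai + ci for ai, ci in zip(matvec(A, x), c)]
    # Should be at floating-point rounding level.
    print(max(abs(p - q) for p, q in zip(y_net, y_lin)))
```

Because the map is exact for every input that shares the same activation pattern, a perturbation δ small enough not to flip any ReLU changes the output by exactly Aδ, which makes the network analyzable with linear-algebra tools at a given instance. Other piecewise linear units fit the same scheme; for leaky ReLU, for example, the mask entries are 1 or the negative-side slope instead of 0.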